Purpose: For educational purposes only. The code demonstrates a supervised learning task using a very simple neural network. In my next post, I am going to replace the vast majority of subroutines with CUDA kernels.

Reference: Andrew Trask's post.

The core component of the code, the learning algorithm, is only 10 lines:

```cpp
int main(int argc, const char * argv[]) {
    for (unsigned i = 0; i != 50; ++i) {
        vector<float> pred = sigmoid(dot(X, W, 4, 4, 1 ) );
        vector<float> pred_error = y - pred;
        vector<float> pred_delta = pred_error * sigmoid_d(pred);
        vector<float> W_delta = dot(transpose( &X[0], 4, 4 ), pred_delta, 4, 4, 1);
        W = W + W_delta;
    }
    return 0;
}
```

The loop above runs for 50 iterations (epochs) and fits the matrix of attributes X to the vector of classes y through the vector of weights W. I am going to use 4 records from the Iris flower dataset. The attributes (X) are sepal length, sepal width, petal length, and petal width. In my example, I use 2 of the 3 classes found in the original dataset: Iris setosa (0) and Iris virginica (1). Predictions are stored in the vector pred.

Neural network architecture. The values of W and pred change over the course of training, while X and y stay fixed:

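| X: sepal length | X: sepal width | X: petal length | X: petal width | W | pred | y |
|---|---|---|---|---|---|---|
| 5.1 | 3.5 | 1.4 | 0.2 | 0.5 | 0.00 | 0 |
| 4.9 | 3.0 | 1.4 | 0.2 | 0.5 | 0.00 | 0 |
| 6.2 | 3.4 | 5.4 | 2.3 | 0.5 | 0.99 | 1 |
| 5.9 | 3.0 | 5.1 | 1.8 | 0.5 | 0.99 | 1 |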

The size of matrix X is the batch size by the number of attributes, here 4 x 4.

Line 3. Make predictions:

```cpp
vector<float> pred = sigmoid(dot(X, W, 4, 4, 1 ) );
```

To calculate the predictions, we first multiply the 4 x 4 matrix X by the 4 x 1 matrix W, and then apply an activation function, in this case the sigmoid function, to every element of the resulting 4 x 1 vector.

A subroutine for matrix multiplication:

```cpp
vector <float> dot (const vector <float>& m1, const vector <float>& m2,
                    const int m1_rows, const int m1_columns, const int m2_columns) {

    /*  Returns the product of two matrices: m1 x m2.
        Inputs:
            m1: vector, left matrix of size m1_rows x m1_columns
            m2: vector, right matrix of size m1_columns x m2_columns
                (the number of rows in the right matrix must be equal
                to the number of the columns in the left one)
            m1_rows: int, number of rows in the left matrix m1
            m1_columns: int, number of columns in the left matrix m1
            m2_columns: int, number of columns in the right matrix m2
        Output: vector, m1 * m2, product of the matrices m1 and m2,
                a matrix of size m1_rows x m2_columns
    */

    vector <float> output (m1_rows*m2_columns);

    for( int row = 0; row != m1_rows; ++row ) {
        for( int col = 0; col != m2_columns; ++col ) {
            output[ row * m2_columns + col ] = 0.f;
            for( int k = 0; k != m1_columns; ++k ) {
                output[ row * m2_columns + col ] += m1[ row * m1_columns + k ] * m2[ k * m2_columns + col ];
            }
        }
    }

    return output;
}
```

A subroutine for the sigmoid function:

```cpp
vector <float> sigmoid (const vector <float>& m1) {

    /*  Returns the value of the sigmoid function f(x) = 1/(1 + e^-x).
        Input: m1, a vector.
        Output: 1/(1 + e^-x) for every element of the input matrix m1.
    */

    const unsigned long VECTOR_SIZE = m1.size();
    vector <float> output (VECTOR_SIZE);

    for( unsigned i = 0; i != VECTOR_SIZE; ++i ) {
        output[ i ] = 1 / (1 + exp(-m1[ i ]));
    }

    return output;
}
```

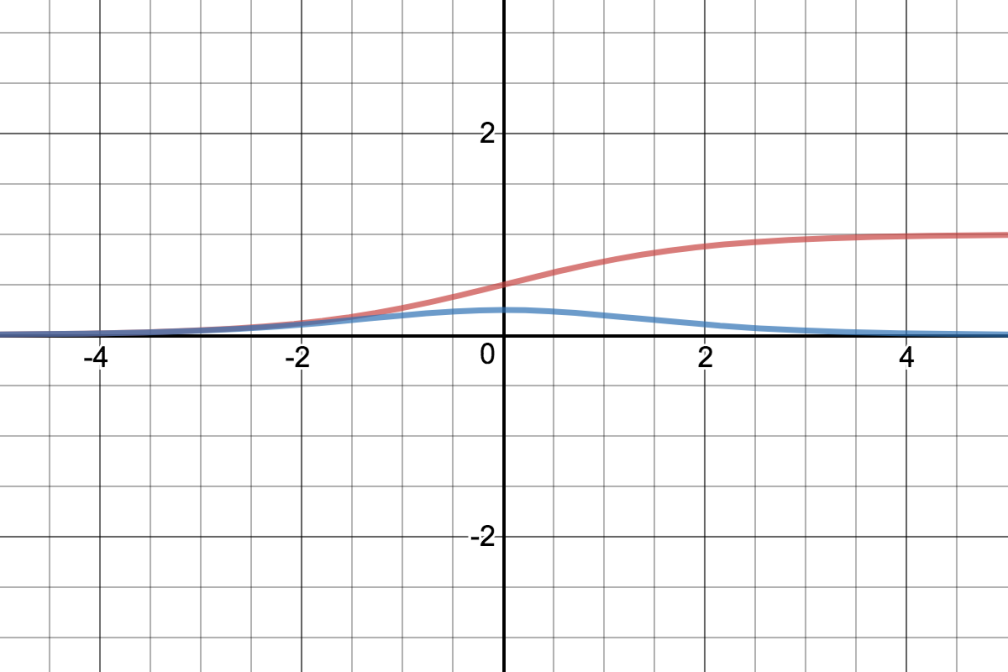

Sigmoid function (red) and its first derivative (blue).

Line 4. Calculate pred_error, the difference between the ground truth y and the predictions pred:

```cpp
vector<float> pred_error = y - pred;
```

In order to subtract one vector from another, we will need to overload the “-” operator:

```cpp
vector <float> operator-(const vector <float>& m1, const vector <float>& m2){

    /*  Returns the difference between two vectors.
        Inputs:
            m1: vector
            m2: vector
        Output: vector, m1 - m2, difference between two vectors m1 and m2.
    */

    const unsigned long VECTOR_SIZE = m1.size();
    vector <float> difference (VECTOR_SIZE);

    for (unsigned i = 0; i != VECTOR_SIZE; ++i){
        difference[i] = m1[i] - m2[i];
    }

    return difference;
}
```

Line 5. Determine the vector of deltas pred_delta:

```cpp
vector<float> pred_delta = pred_error * sigmoid_d(pred);
```

To perform elementwise multiplication of two vectors, we need to overload the “*” operator:

```cpp
vector <float> operator*(const vector <float>& m1, const vector <float>& m2){

    /*  Returns the product of two vectors (elementwise multiplication).
        Inputs:
            m1: vector
            m2: vector
        Output: vector, m1 * m2, product of two vectors m1 and m2
    */

    const unsigned long VECTOR_SIZE = m1.size();
    vector <float> product (VECTOR_SIZE);

    for (unsigned i = 0; i != VECTOR_SIZE; ++i){
        product[i] = m1[i] * m2[i];
    }

    return product;
}
```

A subroutine for the derivative of the sigmoid function (sigmoid_d):

Basically, we use the first derivative to find the slope of the line tangent to the graph of the sigmoid function. At x = 0 the slope equals 0.25. The further x is from 0, the closer the slope is to 0: at x = ±10 it is only about 0.000045. Hence, the deltas will be small if either the error is small or the network is very confident about its prediction (i.e. abs(x) is greater than 4, so pred is close to 0 or 1). Note that sigmoid_d takes the output of the sigmoid, pred, rather than x itself, which is why f(x)(1 - f(x)) is computed as m1[i] * (1 - m1[i]).

```cpp
vector <float> sigmoid_d (const vector <float>& m1) {

    /*  Returns the value of the sigmoid function derivative f'(x) = f(x)(1 - f(x)),
        where f(x) is sigmoid function.
        Input: m1, a vector of sigmoid outputs f(x) (not x itself).
        Output: m1[i] * (1 - m1[i]) for every element of the input vector m1.
    */

    const unsigned long VECTOR_SIZE = m1.size();
    vector <float> output (VECTOR_SIZE);

    for( unsigned i = 0; i != VECTOR_SIZE; ++i ) {
        output[ i ] = m1[ i ] * (1 - m1[ i ]);
    }

    return output;
}
```

Line 6. Calculate W_delta:

This line computes the weight updates by multiplying the transposed matrix X (4 x 4) by the vector pred_delta (4 x 1).

```cpp
vector<float> W_delta = dot(transpose( &X[0], 4, 4 ), pred_delta, 4, 4, 1);
```

The subroutine that transposes matrices:

```cpp
vector <float> transpose (const float *m, const int C, const int R) {

    /*  Returns a transpose matrix of input matrix.
        Inputs:
            m: pointer to the first element of the input matrix, stored row by row
            C: int, number of columns in the input matrix
            R: int, number of rows in the input matrix
        Output: vector, transpose matrix mT of input matrix m (C rows x R columns)
    */

    vector <float> mT (C*R);

    for(int n = 0; n != C*R; n++) {
        int i = n/C;           // row of element n in m
        int j = n%C;           // column of element n in m
        mT[R*j + i] = m[n];    // element (i, j) of m becomes element (j, i) of mT
    }

    return mT;
}
```

Line 7. Update the weights W:

```cpp
W = W + W_delta;
```

To add two matrices elementwise, we need to overload the “+” operator:

```cpp
vector <float> operator+(const vector <float>& m1, const vector <float>& m2){

    /*  Returns the elementwise sum of two vectors.
        Inputs:
            m1: a vector
            m2: a vector
        Output: a vector, sum of the vectors m1 and m2.
    */

    const unsigned long VECTOR_SIZE = m1.size();
    vector <float> sum (VECTOR_SIZE);

    for (unsigned i = 0; i != VECTOR_SIZE; ++i){
        sum[i] = m1[i] + m2[i];
    }

    return sum;
}
```

Complete code:

```cpp
//
//  main.cpp
//  mlperceptron
//
//  Created by Sergei Bugrov on 7/1/17.
//  Copyright © 2017 Sergei Bugrov. All rights reserved.
//

#include <iostream>
#include <vector>
#include <cmath>

using std::vector;
using std::cout;
using std::endl;

vector<float> X {
    5.1, 3.5, 1.4, 0.2,
    4.9, 3.0, 1.4, 0.2,
    6.2, 3.4, 5.4, 2.3,
    5.9, 3.0, 5.1, 1.8
};

vector<float> y {
    0,
    0,
    1,
    1 };

vector<float> W {
    0.5,
    0.5,
    0.5,
    0.5};

vector <float> sigmoid_d (const vector <float>& m1) {

    /*  Returns the value of the sigmoid function derivative f'(x) = f(x)(1 - f(x)),
        where f(x) is sigmoid function.
        Input: m1, a vector of sigmoid outputs f(x) (not x itself).
        Output: m1[i] * (1 - m1[i]) for every element of the input vector m1.
    */

    const unsigned long VECTOR_SIZE = m1.size();
    vector <float> output (VECTOR_SIZE);

    for( unsigned i = 0; i != VECTOR_SIZE; ++i ) {
        output[ i ] = m1[ i ] * (1 - m1[ i ]);
    }

    return output;
}

vector <float> sigmoid (const vector <float>& m1) {

    /*  Returns the value of the sigmoid function f(x) = 1/(1 + e^-x).
        Input: m1, a vector.
        Output: 1/(1 + e^-x) for every element of the input matrix m1.
    */

    const unsigned long VECTOR_SIZE = m1.size();
    vector <float> output (VECTOR_SIZE);

    for( unsigned i = 0; i != VECTOR_SIZE; ++i ) {
        output[ i ] = 1 / (1 + std::exp(-m1[ i ]));
    }

    return output;
}

vector <float> operator+(const vector <float>& m1, const vector <float>& m2){

    /*  Returns the elementwise sum of two vectors.
        Inputs:
            m1: a vector
            m2: a vector
        Output: a vector, sum of the vectors m1 and m2.
    */

    const unsigned long VECTOR_SIZE = m1.size();
    vector <float> sum (VECTOR_SIZE);

    for (unsigned i = 0; i != VECTOR_SIZE; ++i){
        sum[i] = m1[i] + m2[i];
    }

    return sum;
}

vector <float> operator-(const vector <float>& m1, const vector <float>& m2){

    /*  Returns the difference between two vectors.
        Inputs:
            m1: vector
            m2: vector
        Output: vector, m1 - m2, difference between two vectors m1 and m2.
    */

    const unsigned long VECTOR_SIZE = m1.size();
    vector <float> difference (VECTOR_SIZE);

    for (unsigned i = 0; i != VECTOR_SIZE; ++i){
        difference[i] = m1[i] - m2[i];
    }

    return difference;
}

vector <float> operator*(const vector <float>& m1, const vector <float>& m2){

    /*  Returns the product of two vectors (elementwise multiplication).
        Inputs:
            m1: vector
            m2: vector
        Output: vector, m1 * m2, product of two vectors m1 and m2
    */

    const unsigned long VECTOR_SIZE = m1.size();
    vector <float> product (VECTOR_SIZE);

    for (unsigned i = 0; i != VECTOR_SIZE; ++i){
        product[i] = m1[i] * m2[i];
    }

    return product;
}

vector <float> transpose (const float *m, const int C, const int R) {

    /*  Returns a transpose matrix of input matrix.
        Inputs:
            m: pointer to the first element of the input matrix, stored row by row
            C: int, number of columns in the input matrix
            R: int, number of rows in the input matrix
        Output: vector, transpose matrix mT of input matrix m (C rows x R columns)
    */

    vector <float> mT (C*R);

    for(int n = 0; n != C*R; n++) {
        int i = n/C;           // row of element n in m
        int j = n%C;           // column of element n in m
        mT[R*j + i] = m[n];    // element (i, j) of m becomes element (j, i) of mT
    }

    return mT;
}

vector <float> dot (const vector <float>& m1, const vector <float>& m2, const int m1_rows, const int m1_columns, const int m2_columns) {

    /*  Returns the product of two matrices: m1 x m2.
        Inputs:
            m1: vector, left matrix of size m1_rows x m1_columns
            m2: vector, right matrix of size m1_columns x m2_columns (the number of rows in the right matrix
                must be equal to the number of the columns in the left one)
            m1_rows: int, number of rows in the left matrix m1
            m1_columns: int, number of columns in the left matrix m1
            m2_columns: int, number of columns in the right matrix m2
        Output: vector, m1 * m2, product of the matrices m1 and m2, a matrix of size m1_rows x m2_columns
    */

    vector <float> output (m1_rows*m2_columns);

    for( int row = 0; row != m1_rows; ++row ) {
        for( int col = 0; col != m2_columns; ++col ) {
            output[ row * m2_columns + col ] = 0.f;
            for( int k = 0; k != m1_columns; ++k ) {
                output[ row * m2_columns + col ] += m1[ row * m1_columns + k ] * m2[ k * m2_columns + col ];
            }
        }
    }

    return output;
}

void print ( const vector <float>& m, int n_rows, int n_columns ) {

    /*  "Couts" the input vector as n_rows x n_columns matrix.
        Inputs:
            m: vector, matrix of size n_rows x n_columns
            n_rows: int, number of rows in the matrix m
            n_columns: int, number of columns in the matrix m
    */

    for( int i = 0; i != n_rows; ++i ) {
        for( int j = 0; j != n_columns; ++j ) {
            cout << m[ i * n_columns + j ] << " ";
        }
        cout << '\n';
    }
    cout << endl;
}

int main(int argc, const char * argv[]) {

    for (unsigned i = 0; i != 50; ++i) {

        vector<float> pred = sigmoid(dot(X, W, 4, 4, 1 ) );
        vector<float> pred_error = y - pred;
        vector<float> pred_delta = pred_error * sigmoid_d(pred);
        vector<float> W_delta = dot(transpose( &X[0], 4, 4 ), pred_delta, 4, 4, 1);
        W = W + W_delta;

        if (i == 49){
            print ( pred, 4, 1 );
        }
    }

    return 0;
}
```

Output:

```
0.0511965
0.0696981
0.931842
0.899579

Program ended with exit code: 0
```

great job! but could you please check transpose function. If I’m not wrong

mT[n] = m[R*j + i]; must be mT[R*j + i] = m[n];


Hi, i copied your code after understanding it and try to run in dev c++ but it shows so many errors like this: X must be initialize by constructor not by {…}, and extended initializer lists only available with -std=c++11 or -std=gnu++11 and there are so many more.. please help me


Yeah, it must be the version of the compiler. I tested the code with C++11. I guess if you can rewrite the code in a way that works for your compiler, you would learn much more, and in more detail. Otherwise, you can use the compile command in the comment at the very top of the code.


nice code. why did you pass in the X vector as a pointer in the transpose func?


i would love to read this, but the advertisement keeps making the page scroll to the bottom…


Try to read it here: https://translate.google.com/translate?sl=ru&tl=en&js=y&prev=_t&hl=en&ie=UTF-8&u=https%3A%2F%2Fcognitivedemons.wordpress.com%2F2017%2F07%2F06%2Fa-neural-network-in-10-lines-of-c-code%2F&edit-text=&act=url
